SPRAC21A June 2016 – June 2019 OMAP-L132, OMAP-L138, TDA2EG-17, TDA2HG, TDA2SG, TDA2SX, TDA3LA, TDA3LX, TDA3MA, TDA3MD, TDA3MV


TDA2xx and TDA2ex Performance

- Trademarks

- 1 SoC Overview

- 2 Cortex-A15

- 3 System Enhanced Direct Memory Access (System EDMA)

- 4 DSP Subsystem EDMA

- 5 Embedded Vision Engine (EVE) Subsystem EDMA

- 6 DSP CPU

- 7 Cortex-M4 (IPU)

- 8 USB IP

- 9 PCIe IP

- 10 IVA-HD IP

- 11 MMC IP

- 12 SATA IP

- 13 GMAC IP

- 14 GPMC IP

- 15 QSPI IP

- 16 Standard Benchmarks

- 17 Error Checking and Correction (ECC)

- 17.1 OCMC ECC Programming

- 17.2 EMIF ECC Programming

- 17.3 EMIF ECC Programming to Starterware Code Mapping

- 17.4 Careabouts of Using EMIF ECC

- 17.5 Impact of ECC on Performance

- 18 DDR3 Interleaved vs Non-Interleaved

- 19 DDR3 vs DDR2 Performance

- 20 Boot Time Profile

- 21 L3 Statistics Collector Programming Model

- 22 Reference

- Revision History

1.4.3 Initiator Priority

Certain initiators in the system can generate MFLAG signals that give higher priority to the data traffic they initiate. The modules that can generate MFLAG dynamically are VIP, DSS, EVE, and DSP. The following is a brief discussion of the MFLAG mechanism of each of these modules.

- DSS MFLAG

- DSS has four display read pipes (Graphics , Vid1, Vid2, and Vid3) and one write pipe (WB).

- DSS drives MFLAG if any of the read pipes are made high priority and FIFO levels are below low threshold for high-priority display pipe.

- VIDx have 32 KB FIFO and GFX has 16 KB FIFO.

- FIFO threshold is measured in terms of 16-byte word.

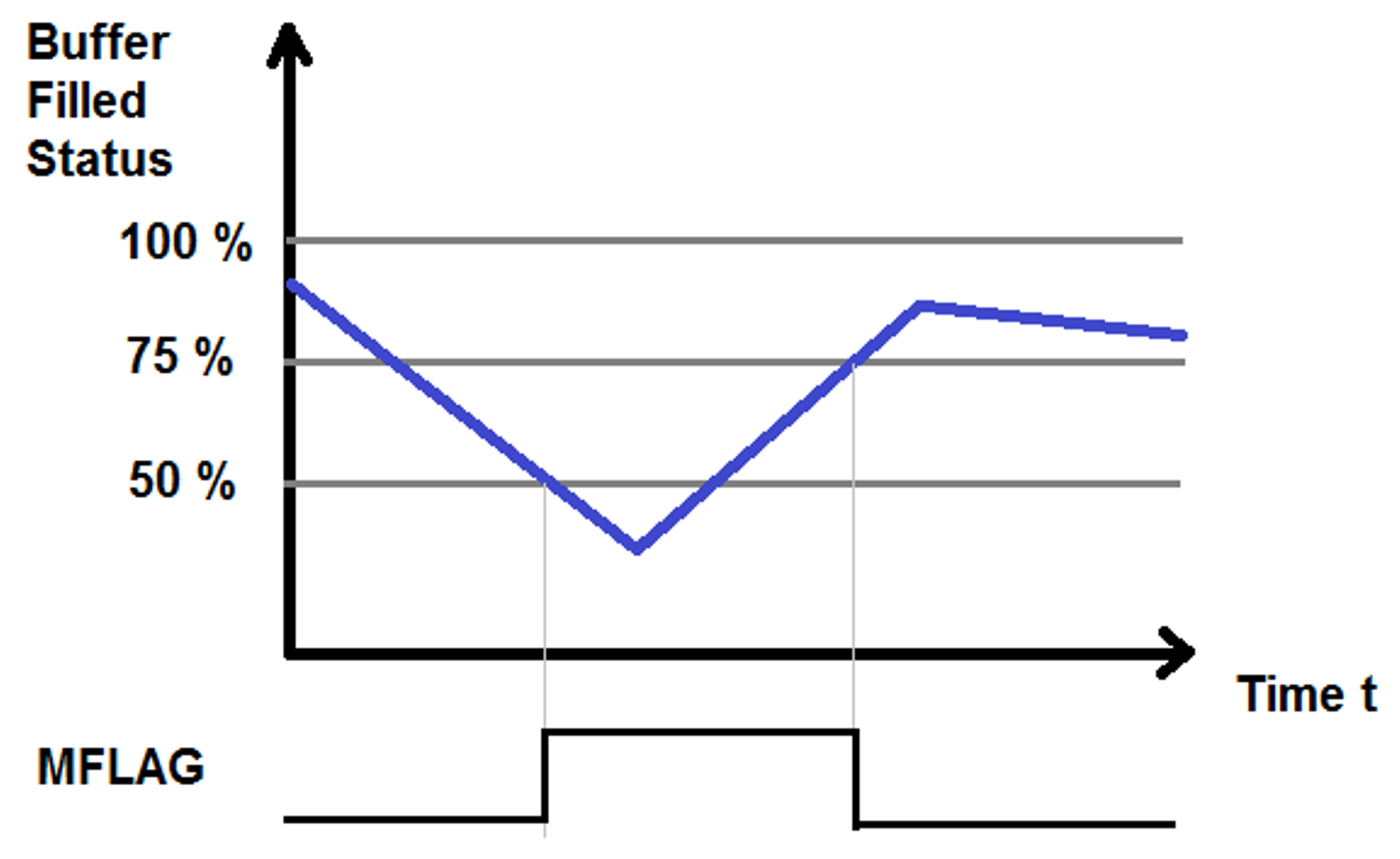

- Recommended settings for high and low threshold are 75% and 50%, respectively.

- MFLAG can be driven high permanently through a force MFLAG configuration of the DISPC_GLOBAL_MFLAG_ATTRIBUTE register.

- DSP EDMA + MDMA

  - EVTOUT[31] and EVTOUT[30] generate the MFLAGs dedicated to the DSP MDMA and EDMA ports, respectively.

  - Setting EVTOUT[31] or EVTOUT[30] to 1 drives the corresponding MFLAG high.

- EVE TC0/TC1

  - For EVE port 1 and port 2 (EVE TC0 and TC1), MFLAG is driven by evex_gpout[63] and evex_gpout[62], respectively.

  - evex_gpout[63] is connected to DMM_P1 and EMIF.

  - evex_gpout[62] is connected to DMM_P2 and EMIF.

- VIP/VPE

  - The priority can be set in bits [11:9] of Word 3 of the VIP/VPE Data Packet Descriptor.

  - This value is mapped to the OCP Reqinfo bits.

  - 0x0 = Highest Priority, 0x7 = Lowest Priority.

  - VIP has a dedicated dynamic MFLAG scheme based on its internal FIFO status:

    - MFLAG is driven based on hardware-set margins to FIFO overflow/underflow.

    - The scheme is enabled by default and has no MMR control.

Many other IPs drive their MFLAG through control module registers.

Figure 4 illustrates how the DSS sets MFLAG dynamically.

Figure 4. TDA2xx and TDA2ex DSS Adaptive MFLAG Illustration

The programming model used to enable dynamic MFLAG is:

Enable MFLAG generation (DISPC_GLOBAL_MFLAG_ATTRIBUTE):

DISPC_GLOBAL_MFLAG_ATTRIBUTE = 0x2;

Set the video pipes as high priority (DISPC_VIDx_ATTRIBUTES, bit 23):

DISPC_VID1_ATTRIBUTES |= (1 << 23);

DISPC_VID2_ATTRIBUTES |= (1 << 23);

DISPC_VID3_ATTRIBUTES |= (1 << 23);

Set the graphics pipe as high priority (DISPC_GFX_ATTRIBUTES, bit 14):

DISPC_GFX_ATTRIBUTES |= (1 << 14);

GFX thresholds: 75% high threshold (HT), 50% low threshold (LT):

DISPC_GFX_MFLAG_THRESHOLD = 0x03000200;

VIDx thresholds: 75% HT, 50% LT:

DISPC_VID1_MFLAG_THRESHOLD = 0x06000400;

DISPC_VID2_MFLAG_THRESHOLD = 0x06000400;

DISPC_VID3_MFLAG_THRESHOLD = 0x06000400;

The CTRL_CORE_L3_INITIATOR_PRESSURE_1 to CTRL_CORE_L3_INITIATOR_PRESSURE_4 registers control the priority (pressure) of certain initiators on the L3_MAIN interconnect:

- 0x3 = Highest Priority/Pressure

- 0x0 = Lowest Priority/Pressure

- These settings are valid for MPU, DSP1, DSP2, IPU1, PRUSS1, GPU P1, and GPU P2.

The CTRL_CORE_EMIF_INITIATOR_PRIORITY_1 to CTRL_CORE_EMIF_INITIATOR_PRIORITY_6 registers set the SDRAM priority of each initiator accessing the two EMIFs. Each 3-bit field in these registers is associated with exactly one initiator: setting a field to 0x0 gives that initiator the highest priority over the others, and setting it to 0x7 gives it the lowest. This feature is useful when several initiators access the external SDRAM concurrently.

On TDA2xx and TDA2ex, the DMM PEG priority overrides the CTRL_CORE_EMIF_INITIATOR_PRIORITY_1 to CTRL_CORE_EMIF_INITIATOR_PRIORITY_6 settings; therefore, it is recommended to set the DMM PEG priority instead of the control module EMIF_INITIATOR_PRIORITY registers.

MFLAG influences the priority of traffic packets at multiple stages:

- At the interconnect level, each NTTP packet carries one pressure bit. When this bit is set to 1, the packet is given priority at all arbitration points. By default, the bit is 0 for all masters. It can be set to 1 either by the bandwidth regulators (within L3) or directly by the masters through the OCP MFLAG signal. MFLAG-asserted pressure is embedded in the packet, whereas pressure from a bandwidth regulator is a handshake signal between the regulator and the switch.

- At the DMM level, MFLAG drives the DMM emergency mechanism. Initiators with MFLAG set are classified as high priority, and a weighted round-robin algorithm arbitrates between these high-priority initiators and the others. Set DMM_EMERGENCY[0] to enable this arbitration scheme; the weight is set in the WEIGHT field, DMM_EMERGENCY[20:16].

- At the EMIF level, the MFLAGs from all system initiators are ORed together, so that whenever any system initiator has MFLAG set, system traffic has higher priority than MPU traffic.