SSZTCZ0 August 2023 TDA4AH-Q1, TDA4AP-Q1, TDA4APE-Q1, TDA4VH-Q1, TDA4VP-Q1, TDA4VPE-Q1, TPS6594-Q1

As we drive through our neighborhoods and towns and see children walking and riding bikes, we’re reminded of the importance of safety on our roads. A 2021 study by the National Highway Traffic Safety Administration (NHTSA) found that in the United States, an average of 20 pedestrians were killed in traffic accidents each day, or one every 71 minutes. In a 2022 study, the World Health Organization determined that 1.3 million people die each year as a result of road traffic accidents, and that more than half of those deaths are among pedestrians, cyclists and motorcyclists. Unfortunately, driver distraction is one of the biggest contributors to these accidents, and our tendency to be distracted seems to increase each year.

Advanced driver assistance systems (ADAS) help mitigate the impact of distraction to protect drivers, pedestrians and other vulnerable road users. With the need to add backup cameras, front-facing cameras and driver-monitoring systems to achieve five-star safety ratings and meet regulatory deadlines, many manufacturers are evolving their vehicle architectures to aggregate active safety functions in ADAS domain controllers.

Domain controllers typically require:

- The ability to interface with multiple sensors that vary in quantity, modality and resolution.

- Vision, artificial intelligence (AI) and general-purpose processing for perception, driving and parking applications.

- Connectivity to low-bandwidth and high-speed vehicle networks.

- Functional safety and security to prevent the corruption of critical operations.
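The sensor-interface requirement is largely a bandwidth problem. The following sketch estimates raw pixel bandwidth for a camera set as width × height × frame rate × bits per pixel, summed over cameras. The camera count, resolutions, 30 fps rate and 12-bit RAW depth are hypothetical example values, not figures from TI or Phantom AI, and the estimate ignores blanking, link encoding and packet overhead.

```python
from typing import Iterable, NamedTuple


class Camera(NamedTuple):
    """One image sensor; the values used below are hypothetical examples."""
    name: str
    width: int           # active pixels per line
    height: int          # active lines per frame
    fps: int             # frames per second
    bits_per_pixel: int  # e.g. 12 for 12-bit RAW sensor output


def raw_bandwidth_gbps(cameras: Iterable[Camera]) -> float:
    """Sum of width * height * fps * bits_per_pixel, in Gbit/s.

    Ignores blanking intervals, link encoding and packet overhead,
    so the real interface bandwidth is higher than this estimate.
    """
    bits_per_second = sum(c.width * c.height * c.fps * c.bits_per_pixel for c in cameras)
    return bits_per_second / 1e9


# Hypothetical surround set: one 8 MP front camera plus five 2 MP cameras.
example_set = [Camera("front", 3840, 2160, 30, 12)] + [
    Camera(name, 1920, 1080, 30, 12)
    for name in ("front-left", "front-right", "rear-left", "rear-right", "rear")
]

print(f"{raw_bandwidth_gbps(example_set):.2f} Gbit/s")  # prints "6.72 Gbit/s"
```

This counts only what leaves the sensors; writing frames to memory and reading them back for pre-processing and inference can multiply the memory traffic inside the SoC.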

Processing and system requirements in ADAS domain controllers

Increasing demands for system memory, computing performance and input/output (I/O) bandwidth are complicating system designs and raising system costs. Today’s higher-end ADAS systems combine multiple cameras of different resolutions with a variety of radar sensors around the car to provide a complete view of the driving environment. For each set of images collected from the sensors, AI- and computer vision-based detection and classification algorithms must run at high frame rates to interpret the scene accurately. This creates challenges for system and software designers, including interfacing these sensors to the processing system, transferring their data into memory, and synchronizing that data so the classification algorithms can process it in real time.
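One common software approach to the synchronization problem is to group frames by capture timestamp. The sketch assumes every frame carries a timestamp from a clock shared by all cameras, that each per-camera queue is ordered oldest-first, and that a frame set is usable when all its timestamps fall within a tolerance. The `Frame` type, `synchronize` function and tolerance value are hypothetical and are not part of TI’s SDK.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Frame:
    camera: str        # hypothetical camera identifier, e.g. "front"
    timestamp_us: int  # capture time on a clock shared by all cameras


def synchronize(queues: Dict[str, List[Frame]], tolerance_us: int) -> Optional[Dict[str, Frame]]:
    """Pop one frame per camera whose timestamps lie within tolerance_us.

    Queues are ordered oldest-first, so a head frame that is too old to
    match the newest head can never match later frames and is dropped.
    Returns None once any queue runs empty.
    """
    if not queues:
        return None
    while all(queues.values()):
        heads = [q[0].timestamp_us for q in queues.values()]
        newest, oldest = max(heads), min(heads)
        if newest - oldest <= tolerance_us:
            # All heads are close enough: emit them as one synchronized set.
            return {cam: q.pop(0) for cam, q in queues.items()}
        # Drop stale heads; the oldest head always qualifies, so each
        # iteration removes at least one frame and the loop terminates.
        for q in queues.values():
            if newest - q[0].timestamp_us > tolerance_us:
                q.pop(0)
    return None


# Example: three cameras at roughly 30 fps with a 2 ms matching tolerance.
queues = {
    "front": [Frame("front", 0), Frame("front", 33_333)],
    "left": [Frame("left", 20_000), Frame("left", 33_900)],
    "rear": [Frame("rear", 1_000), Frame("rear", 34_000)],
}
frame_set = synchronize(queues, tolerance_us=2_000)
print({cam: f.timestamp_us for cam, f in frame_set.items()})
# prints {'front': 33333, 'left': 33900, 'rear': 34000}
```

Dropping stale frames keeps latency bounded at the cost of occasionally discarding data; a real system would also need hardware timestamping or a shared trigger so the timestamps are comparable.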

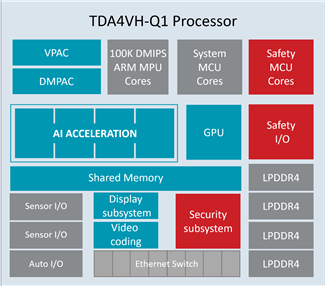

TI’s TDA4VH-Q1 system-on-a-chip (SoC), shown in Figure 1, integrates functions such as vision pre-processing, depth and motion acceleration, AI network processing, automotive network interfaces and a safety microcontroller (MCU). Optimized to power the TDA4VH-Q1 in applications that need to meet Automotive Safety Integrity Level (ASIL) D, the TPS6594-Q1 power-management integrated circuit includes functional safety features such as voltage monitoring, hardware error detection for the TDA4VH-Q1 SoC, and a Q&A watchdog that monitors the MCU on the SoC for software errors that cause lockup.

Figure 1 Simplified diagram of the TDA4VH-Q1 SoC
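A question-and-answer watchdog is stricter than a simple timeout watchdog: the MCU must return the correct answer to the watchdog’s current question, and it must answer inside a time window. A locked-up MCU misses the window, while runaway code tends to answer too early or with the wrong value, so both are flagged. The sketch models that idea only. The answer function, question sequence, window values and error limit are hypothetical; the TPS6594-Q1 data sheet defines the device’s actual question generation, answer rules and reset behavior.

```python
from dataclasses import dataclass


def expected_answer(question: int) -> int:
    """Hypothetical answer rule; the real device defines its own."""
    return (question * 7 + 3) & 0xFF


@dataclass
class QAWatchdog:
    window_open_ms: int    # answers earlier than this are errors
    window_close_ms: int   # no answer by this time counts as a missed window
    error_limit: int       # errors tolerated before a reset is requested
    question: int = 0x5A   # hypothetical first question
    issued_at_ms: int = 0  # time the current question was issued
    errors: int = 0

    def answer(self, value: int, now_ms: int) -> bool:
        """MCU software submits an answer; returns True if it was accepted."""
        elapsed = now_ms - self.issued_at_ms
        in_window = self.window_open_ms <= elapsed <= self.window_close_ms
        ok = in_window and value == expected_answer(self.question)
        if not ok:
            self.errors += 1
        self._next_question(now_ms)
        return ok

    def poll(self, now_ms: int) -> bool:
        """Checked periodically by the watchdog; True if the window expired unanswered."""
        if now_ms - self.issued_at_ms > self.window_close_ms:
            self.errors += 1
            self._next_question(now_ms)
            return True
        return False

    def reset_required(self) -> bool:
        return self.errors >= self.error_limit

    def _next_question(self, now_ms: int) -> None:
        self.question = (self.question + 1) & 0xFF  # hypothetical sequence
        self.issued_at_ms = now_ms


# Example: a correct answer, an early answer, a missed window, a wrong answer.
wd = QAWatchdog(window_open_ms=10, window_close_ms=50, error_limit=3)
print(wd.answer(expected_answer(wd.question), now_ms=20))  # prints True
print(wd.answer(expected_answer(wd.question), now_ms=25))  # prints False (too early)
print(wd.poll(now_ms=100))                                 # prints True (missed window)
print(wd.answer(0, now_ms=120), wd.reset_required())       # prints False True
```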

Enabling multicamera vision perception

One example of an ADAS application that requires increased processor performance is multicamera vision perception. Placing cameras around the car provides 360-degree visibility that helps prevent head-on collisions and helps drivers stay alert to traffic and pedestrian activity in blind spots and adjacent lanes.

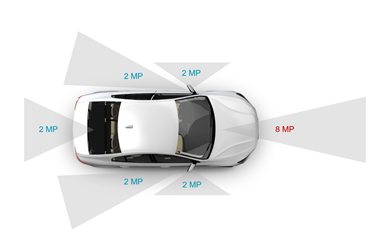

Using TI’s open-source software development kit (SDK) for the J784S4 processor, Phantom AI has developed a multicamera vision perception system for the TDA4VH-Q1. Phantom AI’s PhantomVision™ system offers a suite of ADAS features for the TDA4VH-Q1 processor, ranging from European Union General Safety Regulation compliance up to Society of Automotive Engineers (SAE) Level 2 and Level 2+. Alongside the base functions (vehicle, vulnerable road user, free space, traffic sign and traffic-light detection), PhantomVision™ includes additional functions such as construction zone, turn signal and tail light detection, as well as AI-based ego-path prediction. Phantom AI’s multicamera perception system covers a 360-degree view around the vehicle using a combination of front, side and rearview cameras, and helps eliminate blind spots (Figure 2).

Figure 2 Camera placement for the 360-degree view used by Phantom AI

Phantom AI makes real-time operation possible by using the TDA4VH-Q1’s combination of high-performance computing, deep learning engines and dedicated accelerators for signal and image pre-processing. A dedicated vision pre-processing accelerator handles the camera pipeline, including image capture, color-space conversion and the building of multiscale image pyramids. Together with TI’s deep learning library, the TDA4VH-Q1’s high teraoperations-per-second (TOPS) multicore digital signal processor and matrix multiply assist engine run neural networks efficiently, using fast algorithms and minimal I/O operation scheduling, which results in high accuracy and low latency. In this video, you can see the ADAS features of the PhantomVision™ system running on the TDA4VH-Q1 processor.
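In software terms, the color-space conversion and pyramid steps of that camera pipeline can be sketched as follows. This pure-Python version converts RGB to 8-bit luma with approximate BT.601 weights in fixed point and halves the resolution at each pyramid level with a rounded 2x2 box filter. Both choices are assumptions for illustration; the vision pre-processing accelerator performs these steps in hardware with its own filters and pixel formats.

```python
from typing import List, Tuple

Image = List[List[int]]  # rows of 8-bit pixel values


def rgb_to_luma(rgb: List[List[Tuple[int, int, int]]]) -> Image:
    """Approximate BT.601 luma in 8-bit fixed point: Y = (77*R + 150*G + 29*B) >> 8."""
    return [[(77 * r + 150 * g + 29 * b) >> 8 for (r, g, b) in row] for row in rgb]


def downsample_2x(img: Image) -> Image:
    """Average each 2x2 block (rounded box filter); an odd last row/column is dropped."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [
        [
            (img[2 * y][2 * x] + img[2 * y][2 * x + 1]
             + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1] + 2) // 4
            for x in range(w)
        ]
        for y in range(h)
    ]


def build_pyramid(img: Image, levels: int) -> List[Image]:
    """Level 0 is the input; each further level halves width and height."""
    pyramid = [img]
    while len(pyramid) < levels and len(pyramid[-1]) >= 2 and len(pyramid[-1][0]) >= 2:
        pyramid.append(downsample_2x(pyramid[-1]))
    return pyramid


# Example: an 8x8 mid-gray RGB frame yields luma levels of 8x8, 4x4, 2x2 and 1x1.
frame = [[(128, 128, 128)] * 8 for _ in range(8)]
frame_pyramid = build_pyramid(rgb_to_luma(frame), 4)
print([(len(level), len(level[0])) for level in frame_pyramid])
# prints [(8, 8), (4, 4), (2, 2), (1, 1)]
```

Detectors run on several pyramid levels so that objects near the camera (large in the image) and far away (small) are both found at a size the network handles well.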

Conclusion

Building sophisticated multi-sensor ADAS systems for SAE Level 2 and Level 2+ driving doesn’t require a water-cooled supercomputer. Our work together shows that with a well-designed SoC like TI’s TDA4VH-Q1 in the hands of expert automotive engineers like the team at Phantom AI, compelling, cost-effective systems that meet functional safety requirements can be brought to market. While we can be enthusiastic about the future of autonomy, the true goal of designing cost-effective systems that meet functional safety requirements is to help make our world safer. Making ADAS technology accessible to more segments of the automotive market (more ADAS in more cars) gives drivers and pedestrians a better, safer experience.

Additional resources

- Order the TDA4VH-Q1 evaluation module.

- Watch the “Jacinto™ Processors in Automotive Applications” video training series.